The Dawn of AI Agents in Healthcare Technology

The emergence of AI agents marks a pivotal shift in healthcare technology, with significant implications for efficiency and data management. A prime example is LlamaIndex, a startup founded in 2023 by former Uber scientists Jerry Liu and Simon Suo, which recently unveiled a cloud service for building agents that work with unstructured data. The platform lets healthcare IT professionals and providers apply AI to unstructured data, which can make vital information far easier to find and use in clinical settings.

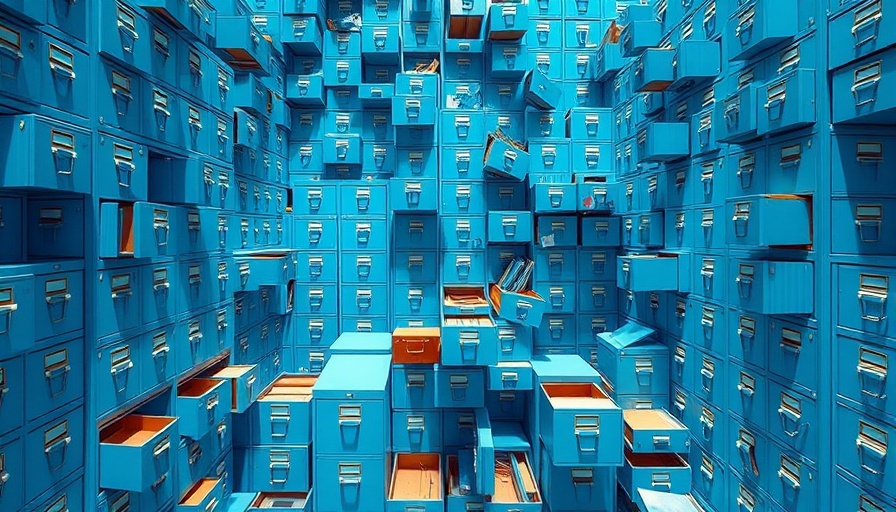

Transforming Unstructured Data into Actionable Insights

LlamaIndex addresses the growing need for tools that can efficiently transform unstructured data (such as clinical notes, imaging reports, and patient records) into structured formats. Using its open-source software, developers can create custom agents that extract critical information, generate reports, and perform tasks autonomously. With these capabilities, healthcare organizations can give clinicians and administrators faster access to the facts buried in free text, which supports better decision-making and, in turn, better patient outcomes.
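To make the idea of turning free text into structured data concrete, here is a minimal Python sketch. It does not use LlamaIndex or any of its APIs; it relies only on the standard library and simple pattern matching, whereas a production agent would typically use a language model for extraction. The `NoteSummary` type, the `extract_note_fields` function, and the sample note are all hypothetical and invented for illustration.

```python
import re
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class NoteSummary:
    """Hypothetical structured view of a free-text clinical note."""
    patient_age: Optional[int] = None
    blood_pressure: Optional[str] = None
    medications: List[str] = field(default_factory=list)


def extract_note_fields(note: str) -> NoteSummary:
    """Pull a few fields out of a note with regular expressions.

    This is a deliberately simple stand-in for the extraction step an
    AI agent would perform; fields that are not found stay empty.
    """
    age = re.search(r"(\d{1,3})[- ]year[- ]old", note, re.IGNORECASE)
    bp = re.search(r"\bBP\s*:?\s*(\d{2,3}/\d{2,3})", note, re.IGNORECASE)
    meds = re.search(r"Medications?\s*:\s*(.+)", note, re.IGNORECASE)

    med_list: List[str] = []
    if meds:
        # Split the comma-separated list and drop trailing periods.
        med_list = [
            m.strip().rstrip(".")
            for m in meds.group(1).split(",")
            if m.strip()
        ]

    return NoteSummary(
        patient_age=int(age.group(1)) if age else None,
        blood_pressure=bp.group(1) if bp else None,
        medications=med_list,
    )


if __name__ == "__main__":
    # Invented sample note, not real patient data.
    sample = (
        "58-year-old male presents with headache. BP: 142/90.\n"
        "Medications: lisinopril, metformin."
    )
    print(asdict(extract_note_fields(sample)))
```

The structured result can then be stored, queried, or passed to a reporting step, which is the kind of downstream use the article describes.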

Streamlined Integration with Existing Healthcare Systems

What sets LlamaIndex apart in the crowded market of AI solutions is its ability to connect to the data sources organizations already use, including platforms like Notion and Slack, as well as traditional file formats like PDFs. Healthcare IT professionals can therefore build agents that work with their current systems without overhauling their infrastructure, which minimizes downtime and adjustment periods. As noted by Liu, “this framework alleviates significant pain points that hinder the deployment of AI agents in production environments.”

Funding and Future Prospects

Backed by a recent $19 million Series A funding round, LlamaIndex is positioned for substantial growth. The round, led by Norwest Venture Partners, will fund the expansion of its development team and improvements to the product. With healthcare providers increasingly recognizing the importance of tailored AI solutions, LlamaIndex aims to establish itself as a leader in the healthcare AI space.

Why Healthcare Providers Should Pay Attention

As LlamaIndex continues to evolve, healthcare administrators and providers should take note of the potential advantages AI agents offer. From reducing operational inefficiencies to enhancing patient care, these capabilities make AI agents an important tool for modern healthcare. For those working in healthcare IT, the ability to transform unstructured data into actionable insights can both streamline workflows and support better health outcomes.
